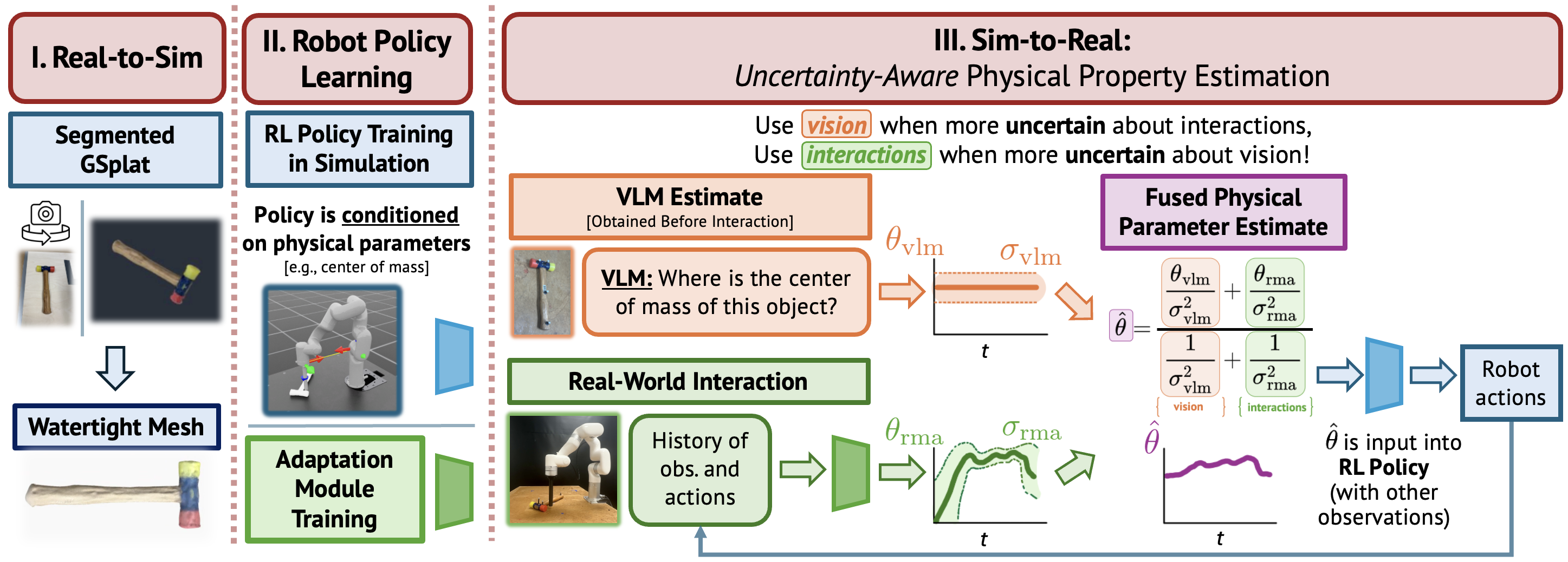

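Phys2Real is a real-to-sim-to-real pipeline for robotic manipulation that combines VLM-based physical parameter estimation with interaction-based adaptation through uncertainty-aware fusion. It comprises three stages: geometric reconstruction of real objects with 3D Gaussian splatting, VLM-inferred priors over physical parameters, and online parameter estimation from interaction data.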

Learning robotic manipulation policies directly in the real world can be expensive and time-consuming. While reinforcement learning (RL) policies trained in simulation present a scalable alternative, effective sim-to-real transfer remains challenging, particularly for tasks that require precise dynamics. To address this, we propose Phys2Real, a real-to-sim-to-real RL pipeline that combines vision-language model (VLM)-inferred physical parameter estimates with interactive adaptation through uncertainty-aware fusion. Our approach consists of three core components: (1) high-fidelity geometric reconstruction with 3D Gaussian splatting, (2) VLM-inferred prior distributions over physical parameters, and (3) online physical parameter estimation from interaction data. Phys2Real conditions policies on interpretable physical parameters, refining VLM predictions with online estimates via ensemble-based uncertainty quantification. On planar pushing tasks of a T-block with varying center of mass (CoM) and a hammer with an off-center mass distribution, Phys2Real achieves substantial improvements over a domain randomization baseline: 100% vs 79% success rate for the bottom-weighted T-block, 57% vs 23% in the challenging top-weighted T-block, and 15% faster task completion for hammer pushing. Ablation studies indicate that the combination of VLM and interaction information is essential for success.

We fuse VLM priors with interactive online adaptation to estimate physical parameters and improve sim-to-real manipulation.

Uncertainty-aware fusion of VLM priors and interaction yields near-privileged sim-to-real performance on planar pushing.

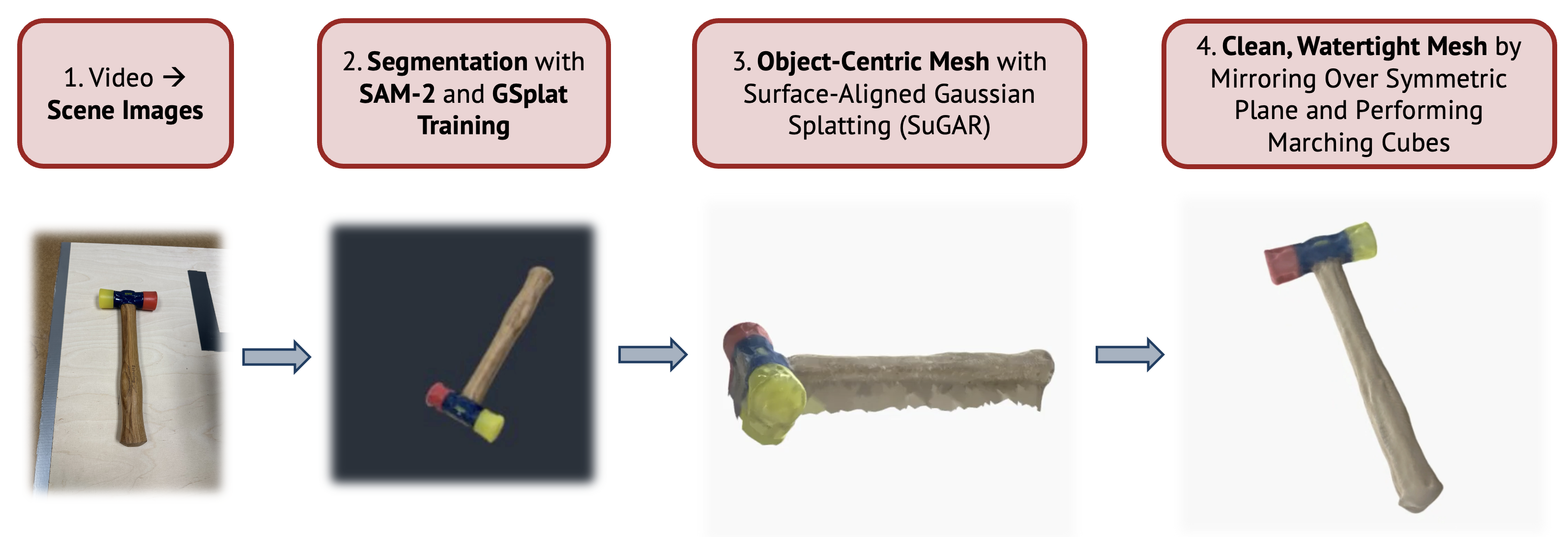

We reconstruct simulation-ready assets from real scenes: segmented 3D Gaussian splats are converted into watertight meshes. This "real-to-sim" step lets policies train on object models that capture the geometry relevant to the task's dynamics.
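Watertightness matters in part because many physics engines derive mass properties from a mesh's enclosed volume, which is only well defined for a closed surface. The sketch below is plain Python and not the authors' code: it computes a closed triangle mesh's volume and geometric centroid using the signed-tetrahedron form of the divergence theorem, assuming faces are wound counter-clockwise when viewed from outside.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Face = Tuple[int, int, int]


def mesh_volume_and_centroid(vertices: Sequence[Vec3],
                             faces: Sequence[Face]) -> Tuple[float, Vec3]:
    """Enclosed volume and geometric centroid of a closed triangle mesh.

    Each face (a, b, c) forms a tetrahedron with the origin; the signed
    volumes sum to the enclosed volume only if the surface is watertight
    and consistently oriented.
    """
    vol = 0.0
    mx = my = mz = 0.0  # volume-weighted sums of tetrahedron centroids
    for i, j, k in faces:
        a, b, c = vertices[i], vertices[j], vertices[k]
        # cross product b x c
        cx = b[1] * c[2] - b[2] * c[1]
        cy = b[2] * c[0] - b[0] * c[2]
        cz = b[0] * c[1] - b[1] * c[0]
        # signed volume of tetrahedron (origin, a, b, c) = a . (b x c) / 6
        v = (a[0] * cx + a[1] * cy + a[2] * cz) / 6.0
        vol += v
        # tetrahedron centroid = (origin + a + b + c) / 4
        mx += v * (a[0] + b[0] + c[0]) / 4.0
        my += v * (a[1] + b[1] + c[1]) / 4.0
        mz += v * (a[2] + b[2] + c[2]) / 4.0
    if abs(vol) < 1e-12:
        raise ValueError("mesh encloses no volume; is it watertight?")
    return vol, (mx / vol, my / vol, mz / vol)


# Usage: a unit cube with outward-facing (counter-clockwise) triangles.
CUBE_VERTS = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),
              (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1)]
CUBE_FACES = [(0, 2, 1), (0, 3, 2), (4, 5, 6), (4, 6, 7),
              (0, 1, 5), (0, 5, 4), (3, 7, 6), (3, 6, 2),
              (0, 4, 7), (0, 7, 3), (1, 2, 6), (1, 6, 5)]
volume, centroid = mesh_volume_and_centroid(CUBE_VERTS, CUBE_FACES)
print(volume, centroid)
```

Under uniform density this centroid is also the CoM. The T-block and hammer experiments deliberately break that assumption, which is why the CoM is treated as a separate physical parameter rather than read off the reconstructed geometry.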

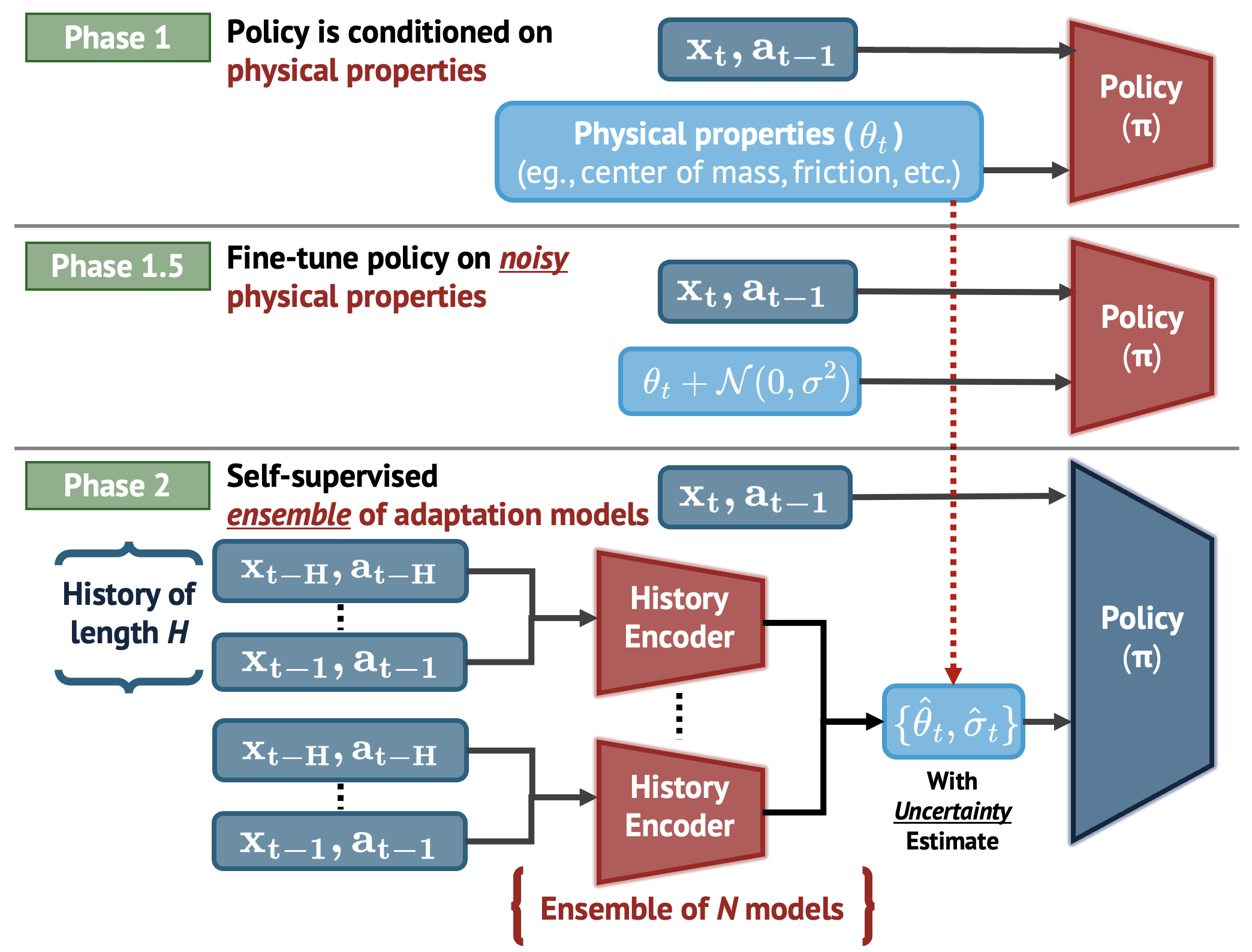

We train policies conditioned on interpretable physical parameters (e.g., the object's CoM). Training occurs in three phases: (1) privileged training with accurate parameters, (1.5) noise-aware fine-tuning for robustness, and (2) online adaptation via an uncertainty-aware ensemble that updates the parameter belief during execution.
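Phase 1.5 is described here only as noise-aware fine-tuning. One plausible reading, sketched under our own assumptions, is that the policy is conditioned on deliberately corrupted parameters so that it learns to tolerate estimation error at deployment. The 2-D planar CoM parameterization, the sampling range, the noise model, and the names `sample_episode_params` and `EpisodeParams` are hypothetical and not taken from the paper.

```python
import random
from dataclasses import dataclass
from typing import Tuple


@dataclass
class EpisodeParams:
    true_com: Tuple[float, ...]      # simulator ground truth (hypothetical planar CoM offset, m)
    observed_com: Tuple[float, ...]  # value the policy is conditioned on


def sample_episode_params(phase: str, rng: random.Random,
                          com_range: float = 0.05,
                          max_noise_std: float = 0.02) -> EpisodeParams:
    """Hypothetical per-episode parameter sampling for phases 1 and 1.5."""
    true_com = (rng.uniform(-com_range, com_range),
                rng.uniform(-com_range, com_range))
    if phase == "privileged":
        # Phase 1: the policy sees the exact simulator parameters.
        return EpisodeParams(true_com, true_com)
    if phase == "noise_aware":
        # Phase 1.5: vary the noise level per episode, then corrupt the parameters.
        std = rng.uniform(0.0, max_noise_std)
        noisy = tuple(c + rng.gauss(0.0, std) for c in true_com)
        return EpisodeParams(true_com, noisy)
    raise ValueError(f"unknown phase: {phase}")


rng = random.Random(0)
print(sample_episode_params("noise_aware", rng))
```

Sampling the noise level per episode, rather than fixing it, is meant to expose the policy to both accurate and poor parameter estimates; whether the actual pipeline does this is not stated on this page.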

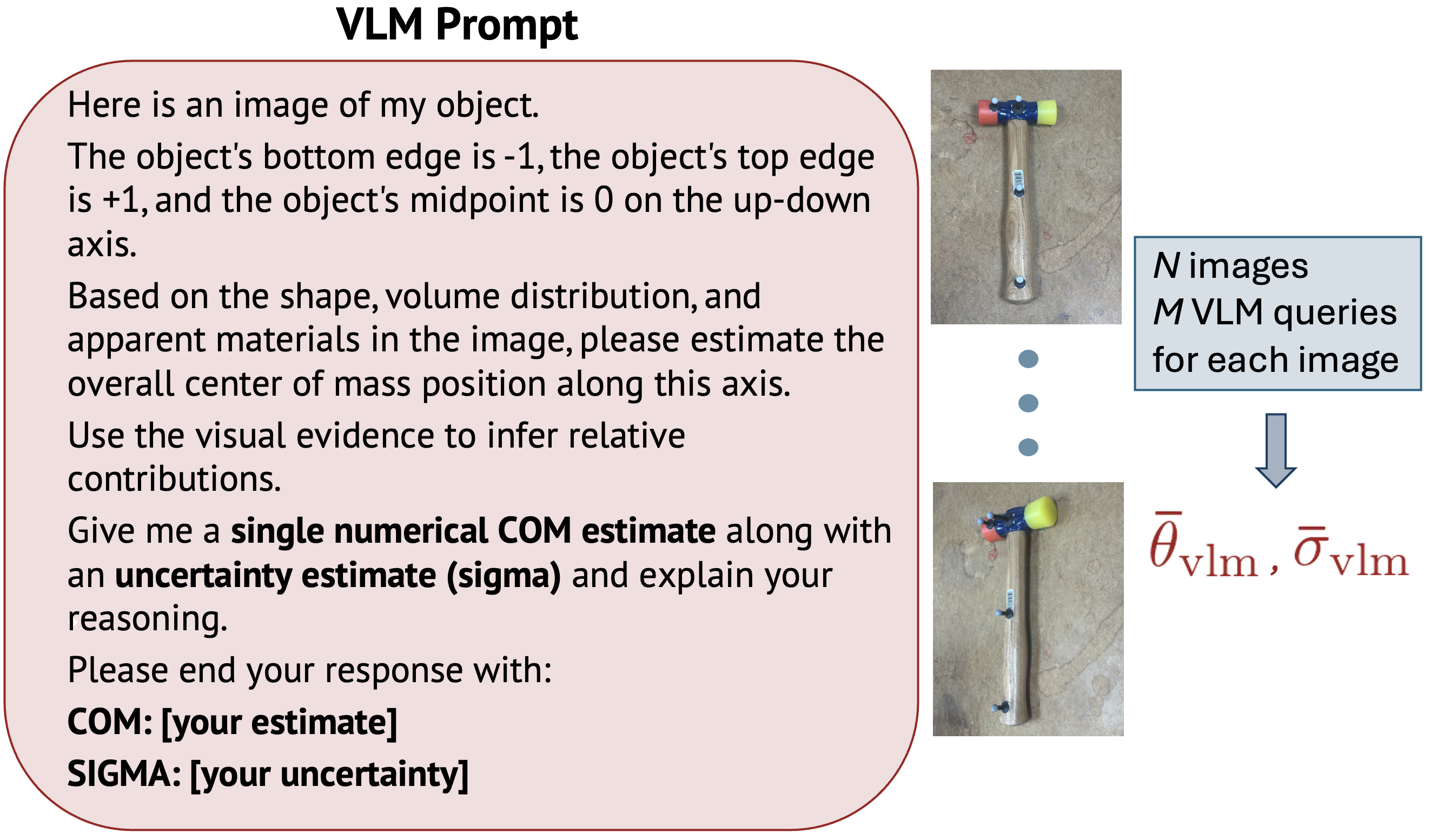

A vision-language model provides a prior distribution over the parameters (mean + uncertainty). During interaction, an RMA-style estimator refines this belief. We fuse the two, weighting each by its uncertainty, so the policy is conditioned on an interpretable estimate that improves over time.
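The exact fusion rule is not spelled out on this page. A common choice, assumed in this sketch, is to treat the VLM prior and the online estimate as independent Gaussians over a single scalar parameter and combine them by inverse-variance (precision) weighting; multi-dimensional parameters would be fused per dimension or with full covariances. The function name `fuse_gaussian` is hypothetical.

```python
from typing import Tuple


def fuse_gaussian(prior_mean: float, prior_var: float,
                  online_mean: float, online_var: float) -> Tuple[float, float]:
    """Precision-weighted fusion of two independent Gaussian estimates."""
    if prior_var <= 0 or online_var <= 0:
        raise ValueError("variances must be positive")
    w_prior = 1.0 / prior_var    # precision of the VLM prior
    w_online = 1.0 / online_var  # precision of the interaction-based estimate
    fused_var = 1.0 / (w_prior + w_online)
    fused_mean = fused_var * (w_prior * prior_mean + w_online * online_mean)
    return fused_mean, fused_var


# Illustrative numbers: both estimates have a 1 cm std, so the fused mean is their midpoint.
mean, var = fuse_gaussian(prior_mean=0.02, prior_var=1e-4,
                          online_mean=0.04, online_var=1e-4)
print(mean, var)
```

The two ablations described next correspond loosely to limiting cases of this rule: letting `online_var` grow without bound recovers the VLM-only estimate, and letting `prior_var` grow recovers the interaction-only estimate.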

VLM only: condition the policy solely on VLM-estimated parameters, without interaction, to evaluate whether priors alone enable effective control.

Interaction only: remove the VLM prior and use only interactive online adaptation.

Reconstructed objects: apply Phys2Real to objects reconstructed from images, without a pre-existing mesh (e.g., the hammer).

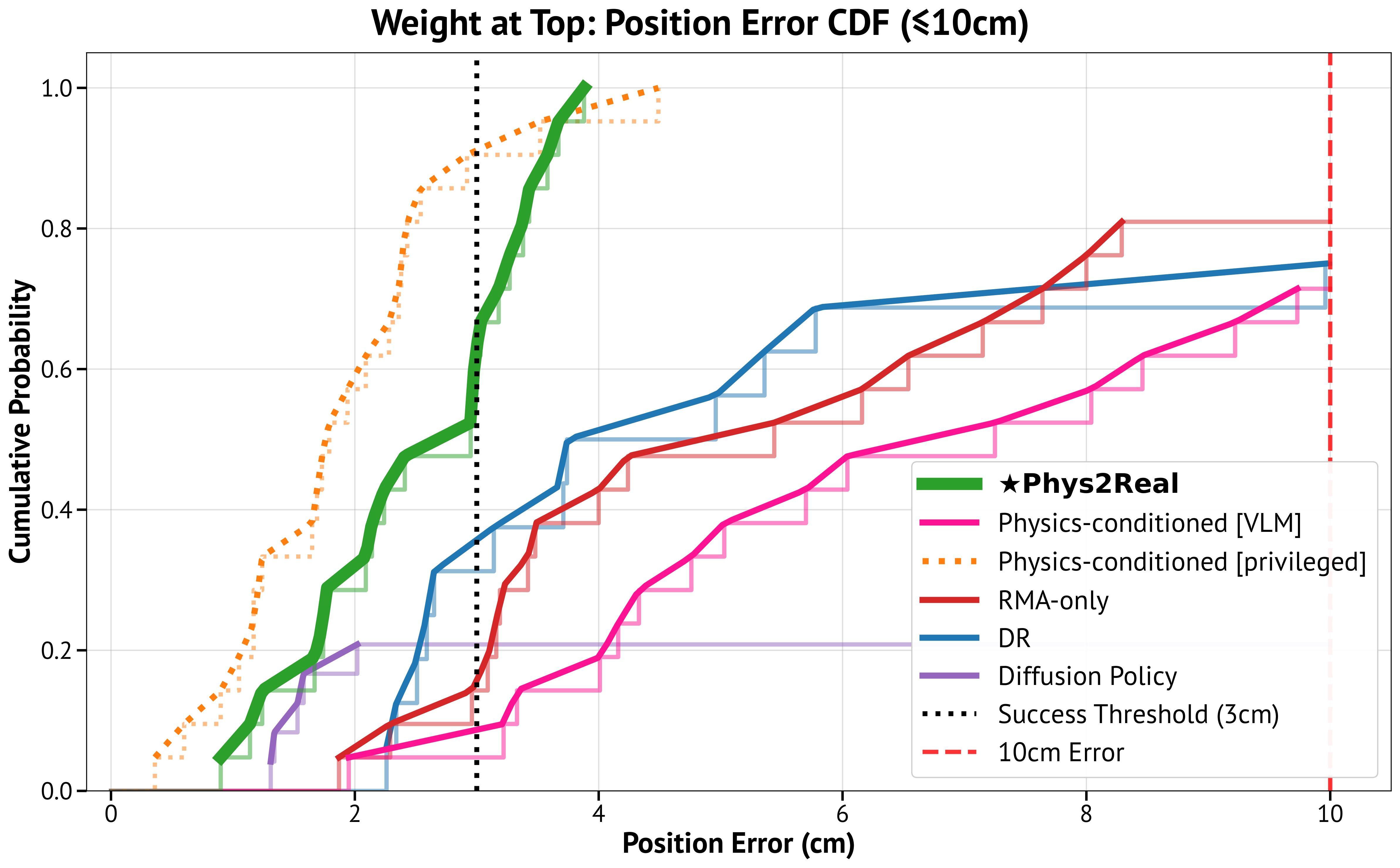

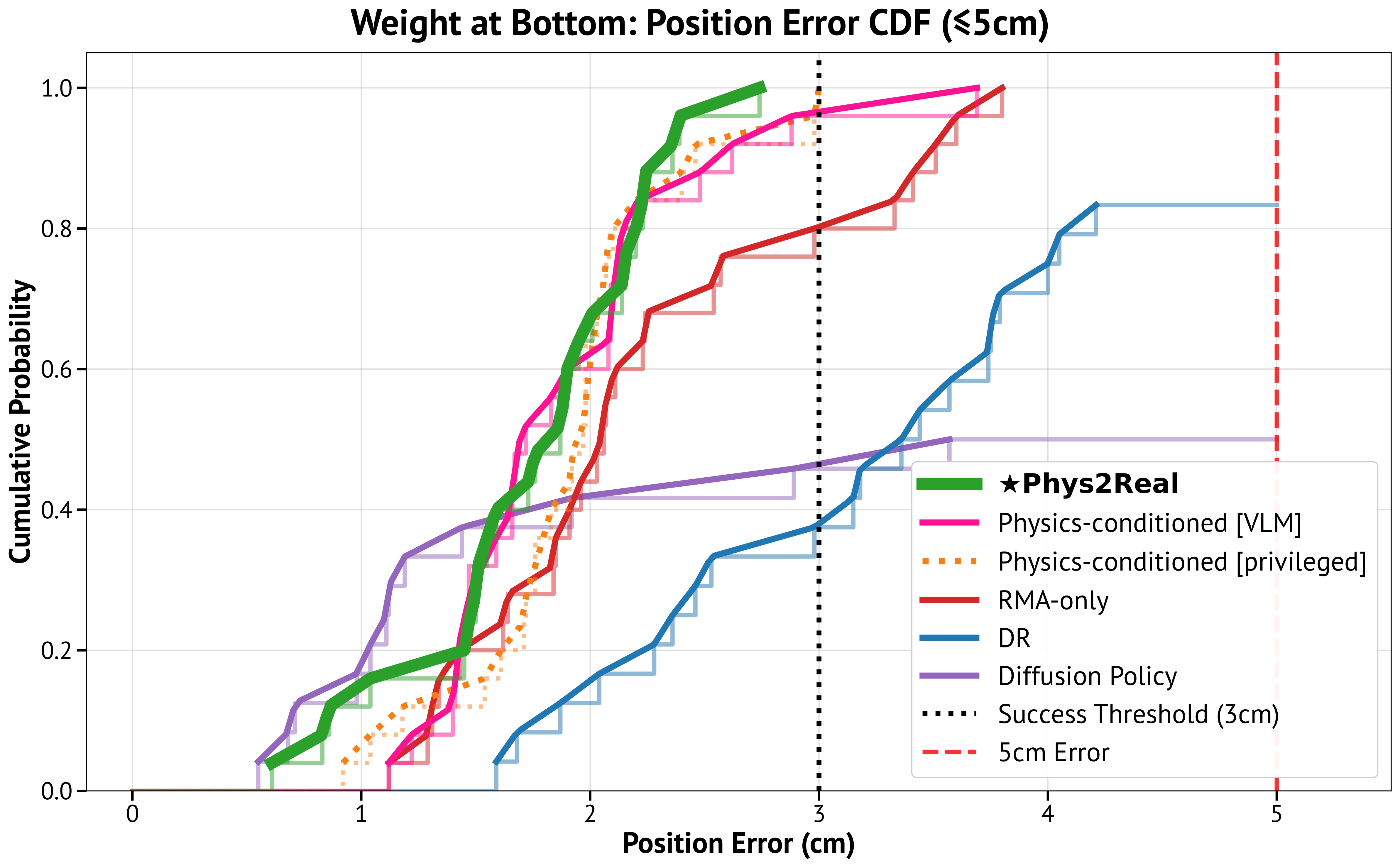

Q1–Q3, T-block pushing: Phys2Real consistently outperforms the domain randomization (DR) and diffusion baselines. Using only VLM priors and interaction, with no privileged parameter access, it approaches privileged-policy performance while remaining interpretable.

CDF = fraction of trials with position error at or below each value. Curves that rise higher and further to the left are better. Example: a value of 0.8 at 2 cm means 80% of trials end within 2 cm.
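For readers reproducing plots like this, an empirical CDF can be computed from per-trial errors as sketched below. The error values are invented for illustration and chosen so that the 2 cm point matches the caption's example; they are not the paper's data.

```python
from bisect import bisect_right
from typing import List, Sequence


def empirical_cdf(errors: Sequence[float], thresholds: Sequence[float]) -> List[float]:
    """For each threshold t, the fraction of trials whose position error is <= t."""
    if not errors:
        raise ValueError("need at least one trial")
    ordered = sorted(errors)
    n = len(ordered)
    # bisect_right returns how many sorted errors are <= t
    return [bisect_right(ordered, t) / n for t in thresholds]


# Made-up position errors (cm) for ten trials, for illustration only.
errors_cm = [0.4, 0.7, 0.9, 1.1, 1.3, 1.6, 1.9, 2.0, 2.6, 3.5]
print(empirical_cdf(errors_cm, [1.0, 2.0, 5.0]))  # → [0.3, 0.8, 1.0]
```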

Success rate, top-weighted T-block: 24% (DR) → 57% (Phys2Real). Bottom-weighted T-block: 79% → 100%.

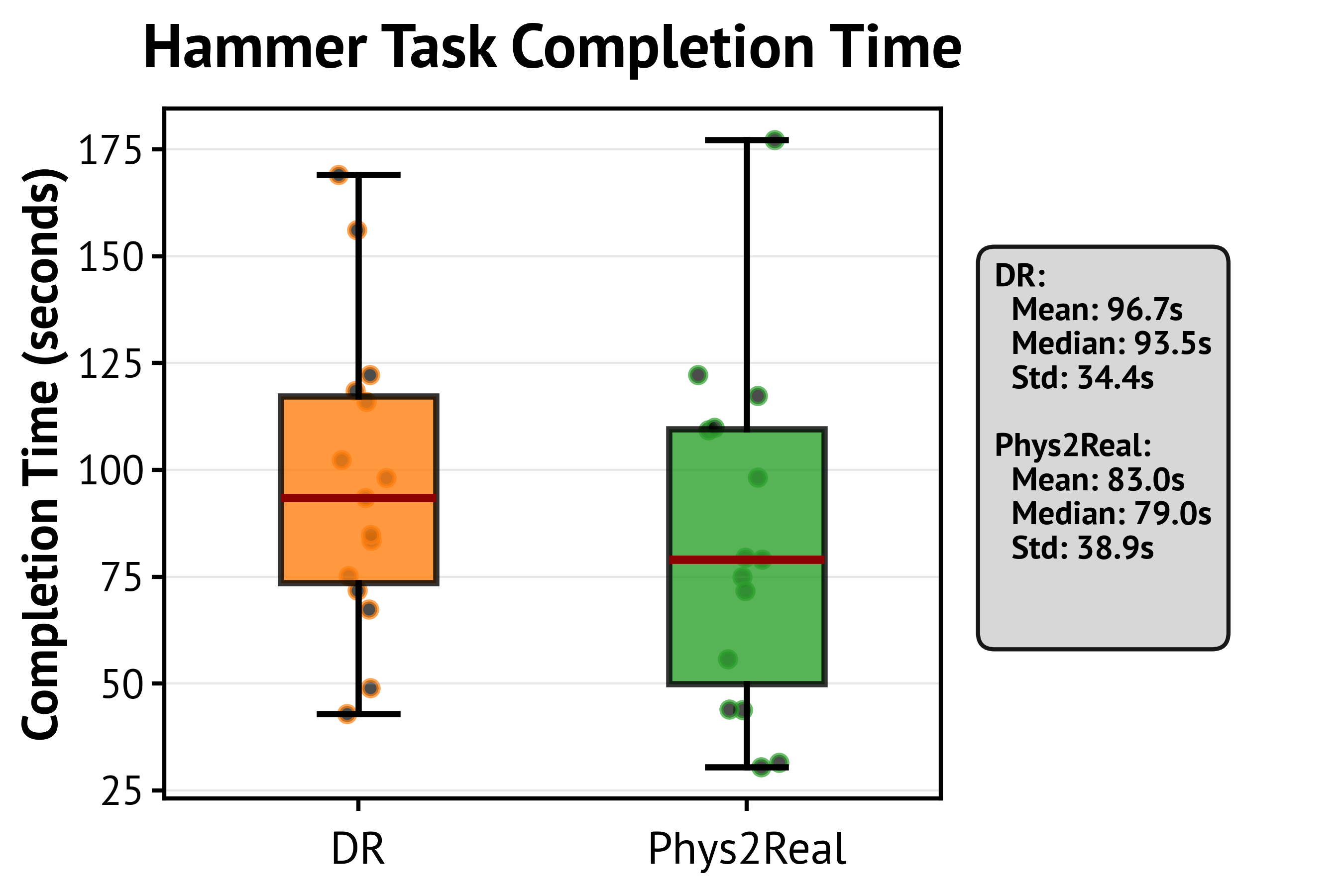

Q4, hammer (reconstructed object): both methods succeed, but Phys2Real completes the task ~16% faster.

Phys2Real — faster completion

Domain Randomization (DR)

Vision provides a prior; interaction reduces uncertainty for robust sim-to-real control.

Early in each episode, the fused estimate relies on the VLM prior. As interaction accumulates and more contact information becomes available, the ensemble’s epistemic uncertainty shrinks, and the fused estimate converges toward the true physical parameters. This real-time adaptation aligns the policy’s internal physics estimates with the true environment, allowing more accurate and precise control.
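To make this convergence behavior concrete, the sketch below assumes that the ensemble's disagreement (the population variance of its members' predictions) serves as its epistemic variance, and that it is fused with the VLM prior by precision weighting. Both are our assumptions, as are the function names and the member predictions, which are made-up numbers rather than measurements.

```python
from statistics import fmean, pvariance
from typing import Sequence, Tuple


def ensemble_estimate(member_preds: Sequence[float]) -> Tuple[float, float]:
    """Ensemble mean and spread; the spread stands in for epistemic variance."""
    return fmean(member_preds), pvariance(member_preds)


def prior_weight(prior_var: float, online_var: float, floor: float = 1e-12) -> float:
    """Share of the fused estimate contributed by the VLM prior under precision weighting."""
    online_var = max(online_var, floor)  # guard against zero spread when members agree exactly
    prior_prec, online_prec = 1.0 / prior_var, 1.0 / online_var
    return prior_prec / (prior_prec + online_prec)


# Illustrative (made-up) CoM predictions in metres from a hypothetical 5-member ensemble.
PRIOR_VAR = 1e-4                            # VLM prior with a 1 cm std
early = [0.00, 0.03, 0.06, 0.01, 0.05]      # little contact data: members disagree
late = [0.038, 0.041, 0.040, 0.039, 0.042]  # more interaction: members agree

for label, preds in (("early", early), ("late", late)):
    mean, var = ensemble_estimate(preds)
    w = prior_weight(PRIOR_VAR, var)
    print(f"{label}: ensemble mean={mean:.3f}, var={var:.1e}, weight on VLM prior={w:.2f}")
```

With these numbers the prior dominates early on and contributes only a small share once the members agree, which mirrors the qualitative behavior described above.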

Phys2Real shows that combining foundation model priors with interactive online adaptation yields physically grounded, uncertainty-aware policies that transfer more reliably to the real world. By treating large vision models as sources of physical intuition and refining those estimates through real interaction, we move toward robotic systems that can understand, predict, and adapt in diverse environments.

```bibtex
@article{wang2025phys2real,
  title   = {Phys2Real: Fusing VLM Priors with Interactive Online Adaptation for Uncertainty-Aware Sim-to-Real Manipulation},
  author  = {Wang, Maggie and Tian, Stephen and Swann, Aiden and Shorinwa, Ola and Wu, Jiajun and Schwager, Mac},
  journal = {arXiv preprint arXiv:2510.11689},
  year    = {2025},
  url     = {https://arxiv.org/abs/2510.11689}
}
```